This is an archive of the Object-Based Media group, which concluded in 2020.

What is Object-Based Media, anyway?

Can the physical world be as connected, engaging, and context-aware as the world of mobile apps? We make systems that explore how sensing, understanding, and new interface technologies (particularly holography and other 3D and immersive displays) can change everyday life, the ways in which we communicate with one another, storytelling, play, and entertainment.

Current Projects

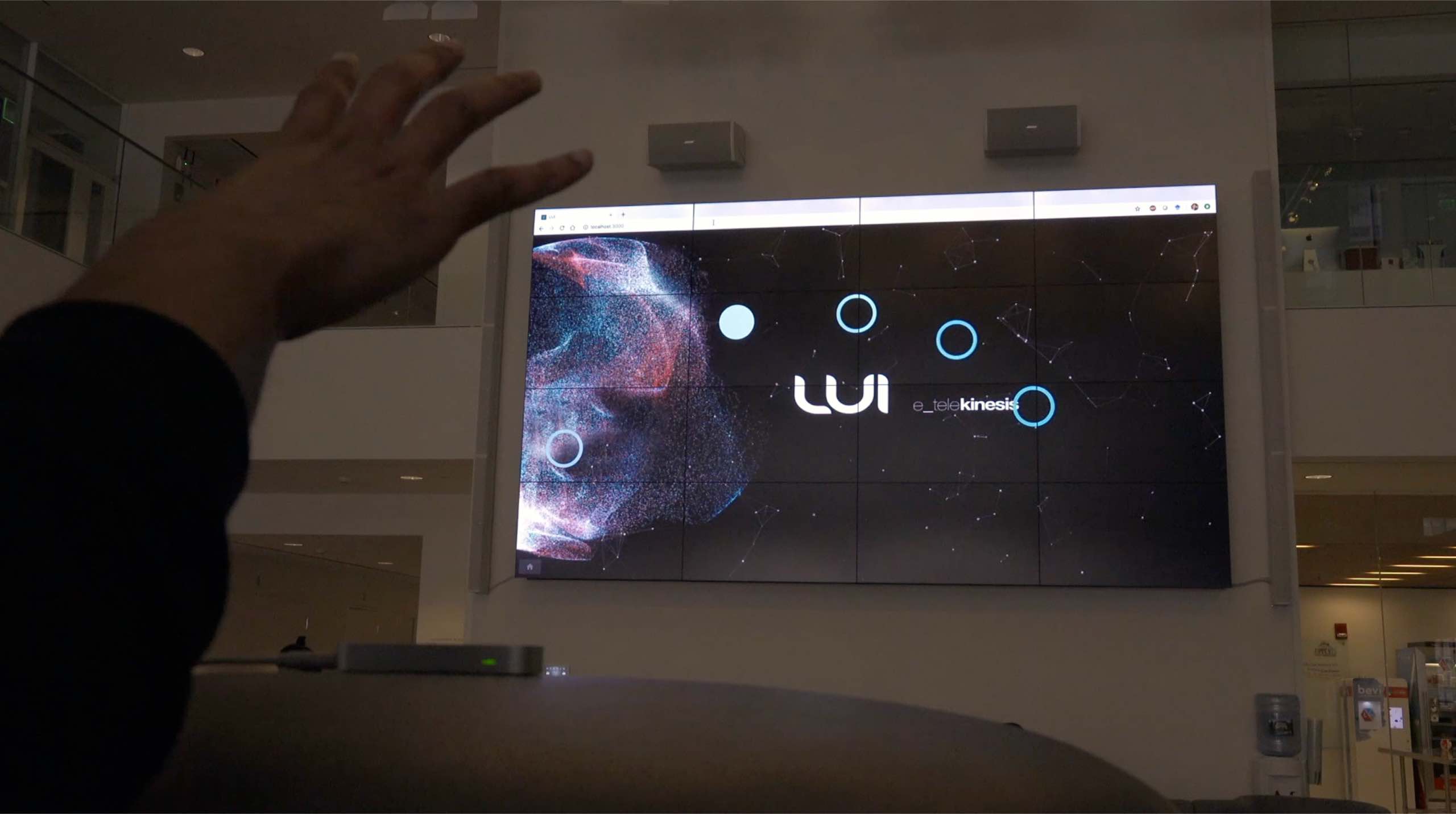

LUI (Large User Interface)

Vik Parthiban

LUI is a scalable, multimodal web interface that uses a custom framework of non-discrete, free-handed gestures and voice to control modular applications with a single stereo camera and voice assistant. The gestures and voice input are mapped to ReactJS web elements to provide a highly responsive and accessible user experience. This interface can be deployed on an AR or VR system, heads-up displays for autonomous vehicles, and everyday large displays. Integrated applications include media browsing for photos and YouTube videos. Viewing and manipulating 3D models for engineering visualization is also in progress, with more applications to be added by developers in the longer term. The LUI menu consists of a list of applications, which the user can “swipe” through and “airtap” to select. Each application has its own set of non-discrete gestures for viewing and changing content. To find a specific application, the user can also speak a voice command to search for it or go to it directly. Developers will be able to easily add more applications because of the modularity and extensibility of this web platform.

Programmable Synthetic Hallucinations

Dan Novy

We are creating consumer-grade appliances and authoring methodology that allow hallucinatory phenomena to be programmed and utilized for information display and narrative storytelling. A particular technology of interest is a wearable array of small transcranial magnetic stimulation coils.

8K Searchin’ Safari

K. Kikuchi (NHK), V. M. Bove, Jr.

“8K Searchin’ Safari” explores the social, collaborative uses of large, high-resolution displays. Multiple users work together to explore a simulated animal habitat on a gesture-sensing 8K screen. The application highlights 8K television’s ability to show highly detailed, true-to-life imagery.

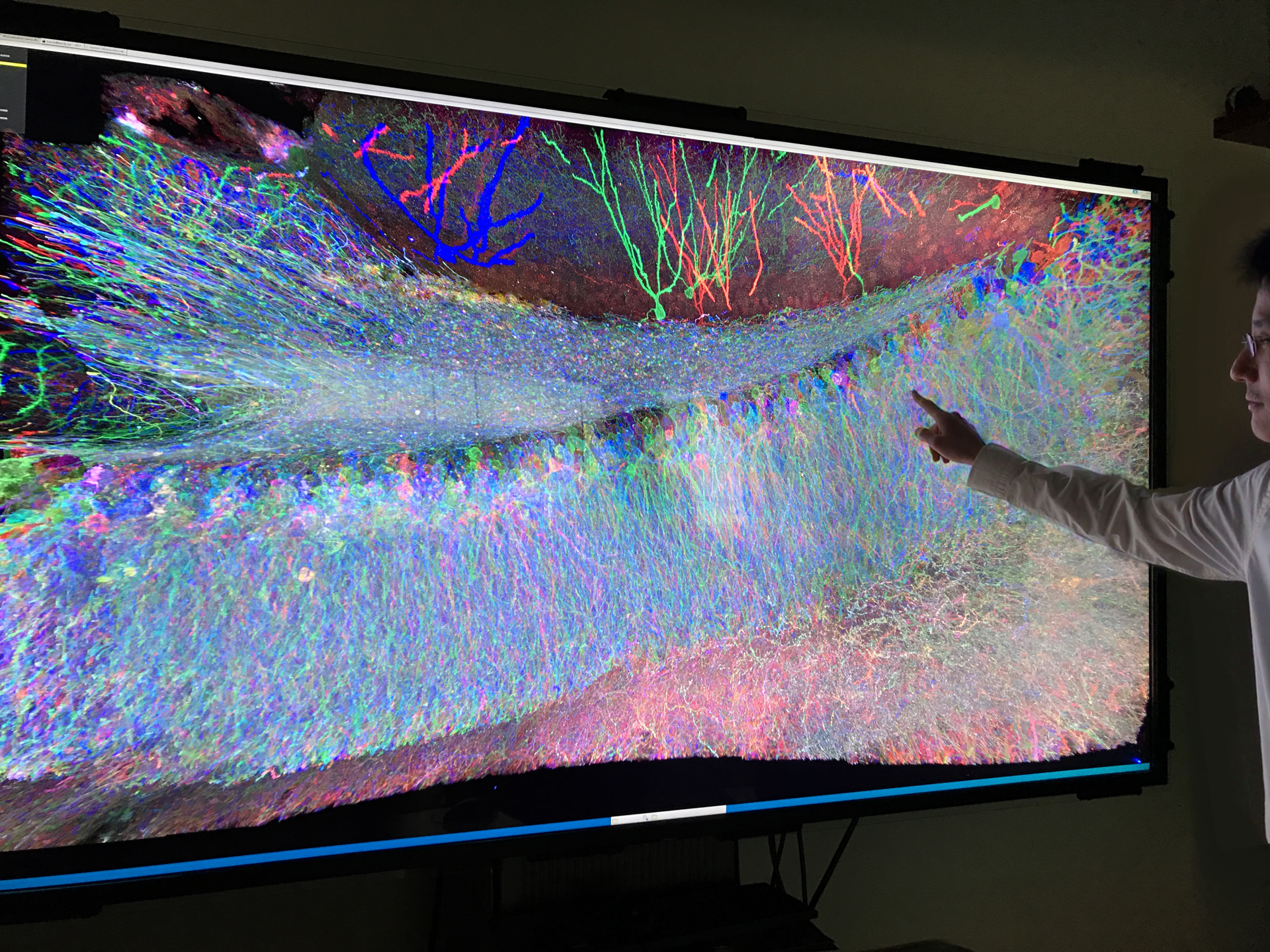

8K Brain Tour

Y. Bando (Toshiba), K. Hiwada (Toshiba), M. Kanaya (NHK), T. Ito (NHK), S. Asano (Synthetic Neurobiology Group), E. Boyden (Synthetic Neurobiology Group), V. M. Bove, Jr.

Three-dimensional visualization of microscopy images of the brain facilitates intuitive understanding of neuronal morphology and circuit connectivity. To demonstrate the investigation of neurons with nanoscale features that can extend over millimeters, we imaged a slice of a mouse hippocampus measuring 1.5 x 0.8 x 0.1 mm using the Expansion Microscopy technique (Science 347(6221):543-548) under a light sheet fluorescence microscope, obtaining a four-color, 5 TB dataset consisting of roughly 25,000 x 14,000 x 2,000 voxels at an effective imaging resolution of 60 nm via the 4.5x physical specimen expansion. The rendering speed for this dataset is 7 frames per second, allowing the user to interactively rotate and zoom into the dataset.
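The figures quoted above can be cross-checked with a little arithmetic. The sketch below is only a back-of-the-envelope check, not part of the project's software: it assumes "5 TB" means 5 x 10^12 bytes and that all four color channels are stored for every voxel, neither of which the description states.

```python
# Back-of-the-envelope check of the dataset figures quoted in the text.
slice_mm = (1.5, 0.8, 0.1)            # physical slice size (from the text)
resolution_nm = 60                     # effective imaging resolution (from the text)
quoted_voxels = (25_000, 14_000, 2_000)
channels = 4                           # four-color dataset (from the text)
dataset_bytes = 5e12                   # ASSUMPTION: "5 TB" read as 5 * 10**12 bytes

# Voxels needed along each axis when sampling at 60 nm (1 mm = 1e6 nm).
estimated_voxels = tuple(round(mm * 1e6 / resolution_nm) for mm in slice_mm)

# Total number of stored samples if every voxel carries all four channels.
total_samples = channels
for n in quoted_voxels:
    total_samples *= n

bytes_per_sample = dataset_bytes / total_samples

print(estimated_voxels)
print(f"{total_samples:.2e} samples, about {bytes_per_sample:.2f} bytes per sample")
```

Under these assumptions the per-axis estimates land close to the quoted "roughly 25,000 x 14,000 x 2,000", and the dataset works out to a little under two bytes per sample. That would be consistent with something like 16-bit storage, though the source does not say how the data is stored.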

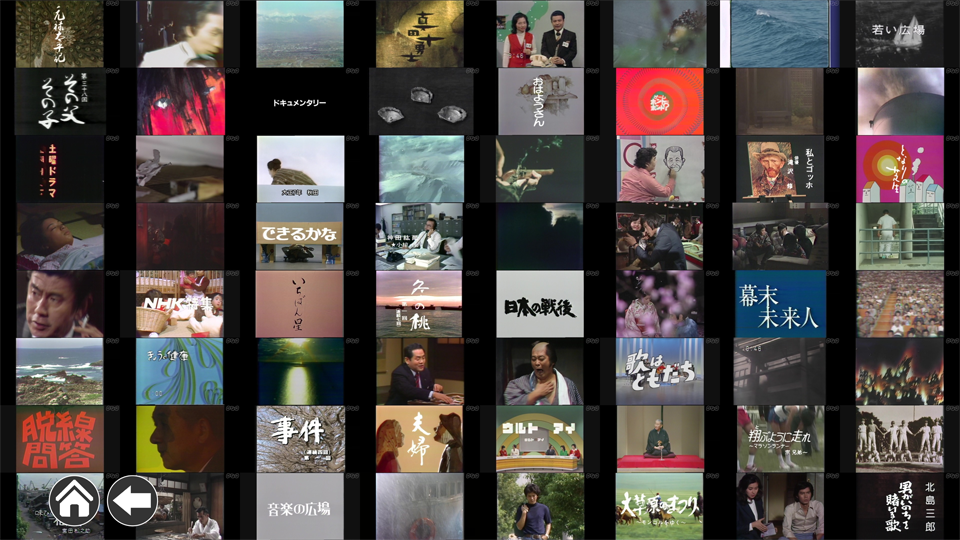

8K Time Machine

Yukiko Oshio (NHK), Hisayuki Ohmata (NHK), and V. Michael Bove, Jr.

Archived TV programs evoke earlier times. This application combines a video and music archive with an immersive screen and a simple user interface suitable for everyone from children to the elderly, to create a “Time Machine” effect. The only key for exploring is the user’s age. People can enjoy over 1,300 TV programs from the last seven decades without having to do tedious text searches. This is not a conventional search engine with text input, but a catalogue that guides the user intuitively using only images. The image array (64 different videos on one screen at the same time) simplifies navigation and makes it immediate, with no need to refer back to previous screens.

As the highest-resolution consumer video display (33M pixels, 7680 x 4320, or 16 times that of HD), an 8K screen can show 64 simultaneous video images in SDTV quality. The high pixel density also renders lettering as crisply as printed paper, with no flickering, which is gentle on the user’s eyes.
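The pixel arithmetic behind these figures is easy to verify. The sketch below assumes that "HD" means 1920 x 1080 and that the 64 videos are laid out as an 8 x 8 grid; the description gives the count of 64 but not the layout.

```python
# Pixel-count arithmetic for an 8K screen.
UHD_8K = (7680, 4320)
FULL_HD = (1920, 1080)   # ASSUMPTION: "HD" means 1920 x 1080
GRID = 8                 # ASSUMPTION: 64 videos arranged as an 8 x 8 grid

pixels_8k = UHD_8K[0] * UHD_8K[1]
pixels_hd = FULL_HD[0] * FULL_HD[1]
ratio = pixels_8k // pixels_hd

# Size of each video tile when the screen is divided evenly.
tile = (UHD_8K[0] // GRID, UHD_8K[1] // GRID)

print(pixels_8k)  # 33177600
print(ratio)      # 16
print(tile)       # (960, 540)
```

Each tile comes out at 960 x 540 pixels, which is in the neighborhood of standard-definition frame sizes and so fits the "SDTV quality" description.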

4K/8K Comics

Dan Novy

4K/8K Comics applies the affordances of ultra-high-resolution screens to traditional print media such as comic books, graphic novels, and other sequential art forms. The comic panel becomes the entry point to the corresponding moment in the film adaptation, while scenes from the film indicate the source frames of the graphic novel. The relationship between comics, films, parodies, and other support materials can be navigated using native touch screens, gestures, or novel wireless control devices. Big Data techniques are used to sift, store, and explore vast catalogs of long-running titles, enabling sharing and remixing among friends, fans, and collectors.

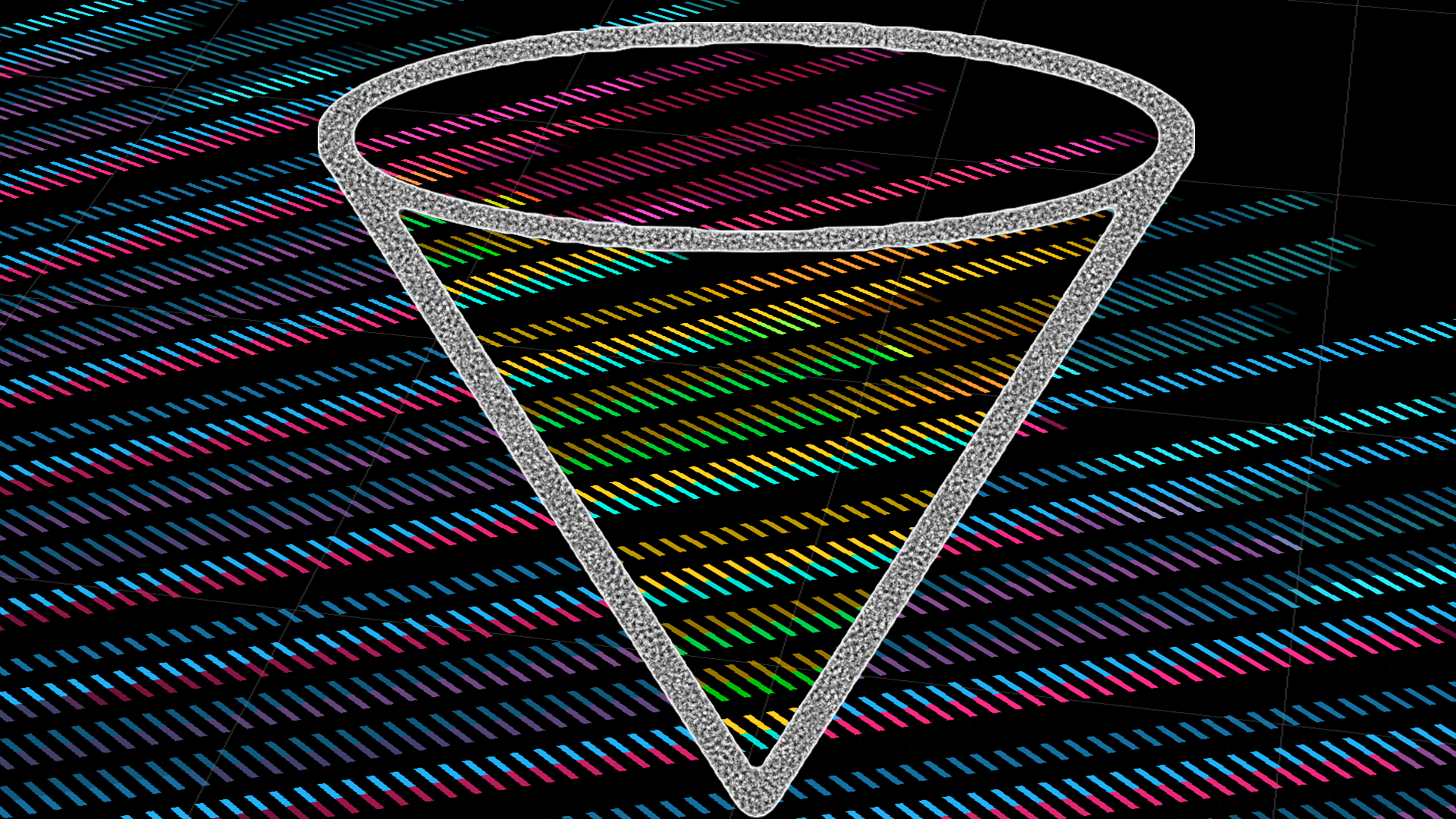

Funnel Vision

Emily Salvador

Every year, we progress towards a new reality where digital content blends seamlessly with our physical world. Augmented reality (AR) headsets and volumetric displays afford us the ability to create worlds that are as rich as the movies we watch and to interact with media in ways that contextualize our environments. However, AR headsets can only be experienced by one individual at a time, and most volumetric displays are fragile or have reciprocating components.

Funnel Vision aims to bring 3D lightfields to the physical realm using lenticular rendering, conical reflection, and a 4K monitor. The need for inexpensive, reliable 3D, 360-degree display technologies grows as AR applications continue to increase in popularity. In real time, Funnel Vision creates an AR experience that can be viewed from any angle, using primarily inexpensive, readily available components. The radial optics partition views generated in real time in Unity, which are then reflected off a mirrored cone to produce a volumetric image. This system can generate 16-25 discrete, autostereoscopic viewing zones that wrap 360 degrees.
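To give a feel for what 16-25 viewing zones around a full circle means, the sketch below computes the angular width of each zone and maps a viewer's position to a zone index. It assumes the zones are equal in width and numbered from 0 starting at azimuth 0, which the description does not specify; the function names are hypothetical and this is not the project's Unity code.

```python
def zone_width_deg(num_zones: int) -> float:
    """Angular width of one viewing zone, assuming equal-width zones."""
    return 360.0 / num_zones


def zone_index(viewer_azimuth_deg: float, num_zones: int) -> int:
    """Map a viewer's azimuth (in degrees) to a zone index in [0, num_zones)."""
    azimuth = viewer_azimuth_deg % 360.0          # wrap negative or large angles
    index = int(azimuth * num_zones // 360.0)
    return min(index, num_zones - 1)              # guard against float rounding at the wrap


print(zone_width_deg(16))   # 22.5
print(zone_width_deg(25))   # 14.4
print(zone_index(100.0, 16))
```

With 16 zones each view covers 22.5 degrees, and with 25 zones each covers 14.4 degrees, so a viewer walking around the display would cross into a new rendered view every few steps.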

Additionally, this system provides affordances not available with existing devices given that it is portable, perspective-occluding, and collaborative. I plan to create a 3D character that animates and responds in real time based on location data, human interaction, and emotional evaluations to highlight the capabilities of this unique display. Ultimately, I hope this thesis will inspire the entertainment and consumer electronics industries to pursue this novel display technology that brings characters to life and showcases effects at the same fidelity as they exist in the digital world.

Holosuite: Interactive 3D Telepresence

Ermal Dreshaj, Sunny Jolly

We are exploring holographic video as a medium for interactive telepresence. Holosuite is an application that simulates holographic rendering on advanced 3D displays, connecting remote users so that they can collaborate, visualize, and share 3D information across the internet with full motion parallax and stereoscopic rendering. Using real-time CGH (computer-generated holography), we can also render the likeness of a remote user with parallax and depth without the need for glasses or software head-tracking solutions, creating an immersive experience with high realism.

Holosuite Video Link

Holosuite ISDH 2015 Poster

Holographic Video: Optical Modeling and User Experience

Sunny Jolly, Andrew MacInnes, and Daniel Smalley (BYU)

Holographic video work, which originated in the Spatial Imaging Group (using computing hardware developed by the Object-Based Media Group), moved to our lab in 2003. We are developing electro-optical technology that will enable the graphics processor in your PC or mobile device to generate holographic video images in real time on an inexpensive screen. As part of this work we are developing gigapixel-per-second light modulator chips, real-time rendering methods to generate diffraction patterns from 3-D graphics models and parallax images, and user interfaces and content for holographic television. We have also demonstrated real-time transmission of holographic video.

Cheap, color holographic video (MIT News Release)

3-D TV? How about holographic TV? (MIT News Release)

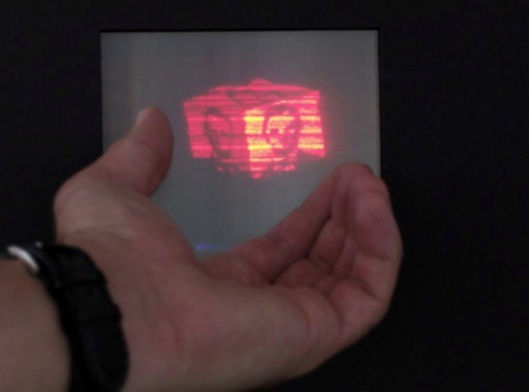

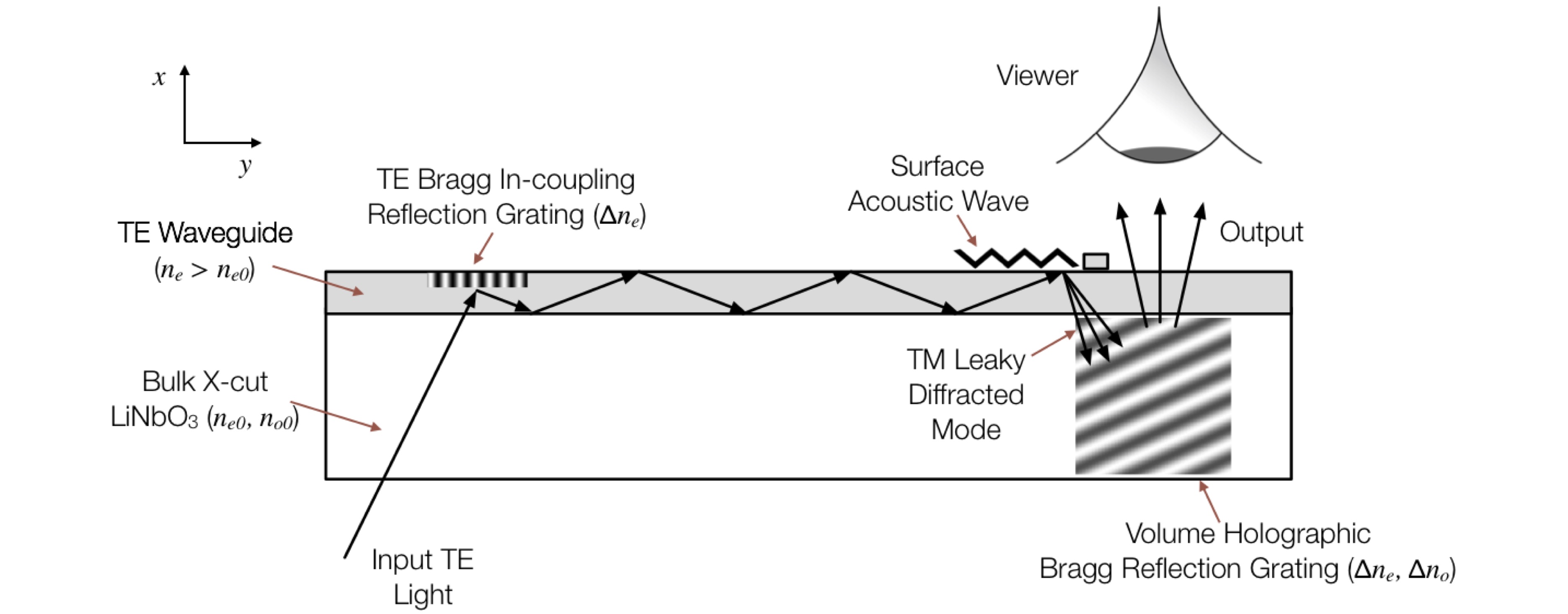

See-Through Holographic Video Display

Sunny Jolly, Nickolaos Savidis, Bianca Datta, Daniel Smalley (BYU), and V. Michael Bove, Jr.

We are developing a true holographic display in the form of a transparent flat panel, suitable for wearable augmented reality applications, heads-up displays, mobile devices, and “invisible” 3D video screens. The hologram is encoded as surface acoustic waves, which outcouple the lightfield from waveguides under the surface of the panel. An additional dimension of this work is that we are developing femtosecond laser fabrication techniques that enable “printing” these devices rather than using traditional photonic device manufacturing methods.

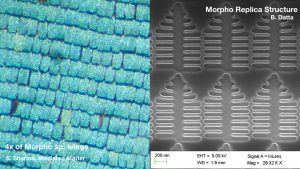

MORPHO: Bio-Inspired Photonic Materials: Producing Structurally Colored Surfaces

Bianca Datta, Sunny Jolly

Advances in science and engineering are bringing us closer and closer to systems that respond to human stimuli in real time. Scientists often look to biology for examples of efficient, spatially tailored multifunctional systems, drawing inspiration from photonic structures such as the multilayer stacks found in the Morpho butterfly. In this project, we develop an understanding of the landscape of responsive, bio-inspired, and active materials, drawing from principles of photonics and bio-inspired material systems. We are exploring various material processing techniques to produce and replicate structurally colored surfaces, while developing simulation and modeling tools (such as inverse design processes) to generate new structures and colors. Such complex biological systems require advanced fabrication techniques. Our designs are realizable through fabrication using direct laser writing techniques such as two-photon polymerization. We aim to compare our model system and simulations to fabricated structures using optical microscopy, scanning electron microscopy, and angular spectrometry. This process provides a toolkit with which to examine and build other bio-inspired, tunable, and responsive photonic systems and expand the range of achievable structural colors.

Unlike working with natural structures, producing biomimetic surfaces allows researchers to test beyond the tunability that occurs naturally and to explore new theory and models for designing structures with optimized functions. The benefits of such biomimetic nanostructures are plentiful: they provide brilliant, iridescent color with mechanical stability and light-steering capabilities. By producing biomimetic nanostructures, designers and engineers can capitalize on the unique properties of optical structural color, and examine these structures based on human perception and response.

Atmopragmascope

Everett Lawson

A steam-powered (literally “steampunk”) volumetric display.

We are surrounded by displays and technologies whose mechanisms are hidden from view. This work was an exploration of revealing the underlying mechanisms of not only how we generate and parse visual information but how it can be delivered. The design process and its outcomes are derivatives, in part, of the mechanics of the eye which guide our estimation of a “fair curve.” Working within the parameters of 19th-century tools and techniques, we adopted the perspective of research modalities relevant for a time in which the eye and low-level visual mechanisms for discerning thresholds, edges, and shapes were the dominant tools in the creation of experimentation. Modern design and engineering tools, while enabling increasingly complex and sophisticated research, can also obfuscate the fundamentals of form and function. By minimizing the influence of technological aids, we give our vision in the act of creation, the articulation of this machine intended to give form to the fundamental algorithms inherent in early biological visual processing.

Hover: A Wearable Object Identification System for Audio Augmented Reality Interactions

P. Colón-Hernández, V. M. Bove, Jr.

We designed, implemented, and tested a proof-of-concept, wrist-based wearable object identification system that allows users to “hover” their hands over objects of interest and get access to contextual information that may be tied to them, through an intelligent personal assistant. The system uses a fusion of sensors to identify an object under a variety of conditions. These sensors include a camera (operating in the visible and infrared spectrum), a small solid-state radar, and multi-spectral light spectroscopy sensors. Users can interact with contextual information tied to an object through conversations with an intelligent assistant, permitting a hands-free, unobtrusive, and discreet experience. The system explores audio interfacing with augmented reality content without the hassle of phones or head-mounted devices.

AirTap

Ali Shtarbanov

An array of controllable, steerable air vortex generators incorporated in the frame of a 3D video screen creates strong, long-range free-space haptic feedback when a user touches a displayed object.

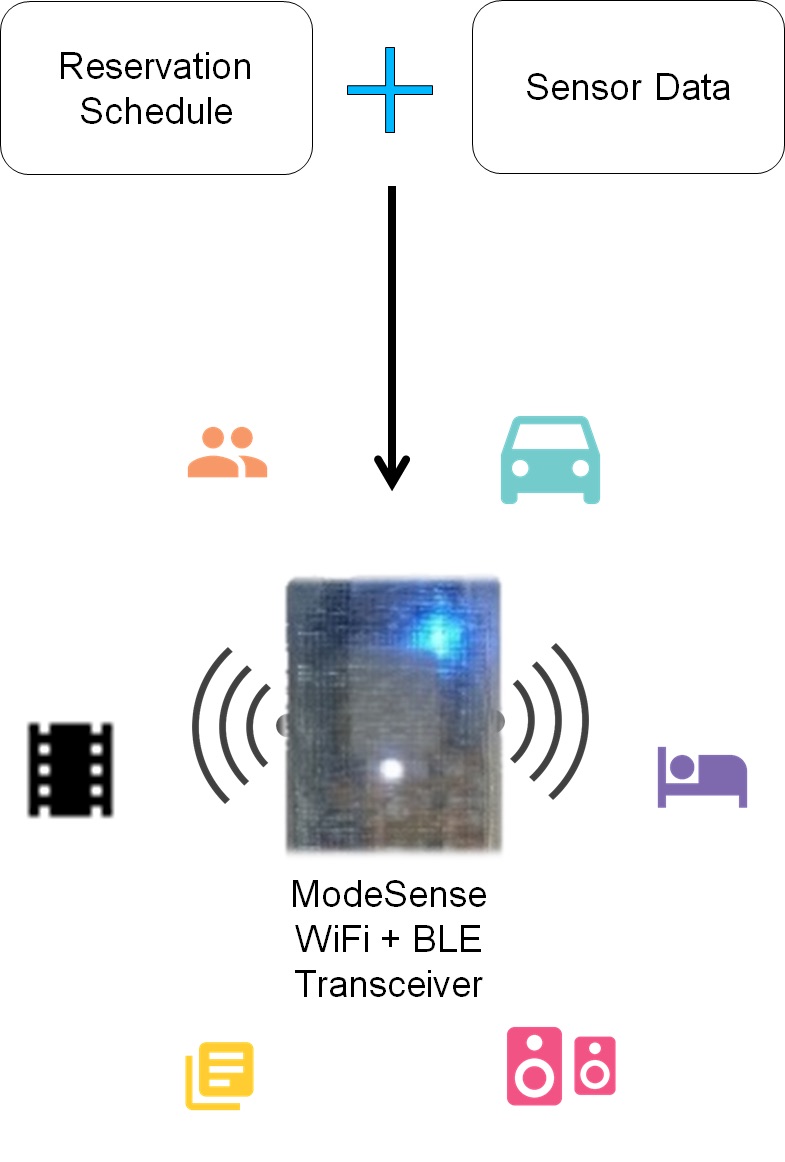

ModeSense

Ali Shtarbanov

ModeSense uses room schedules, sensed contextual information, and social information to automatically configure mobile devices to an appropriate mode (Driving Mode, Theater Mode, Meeting Mode, Party Mode, etc.) for the context of the room in which the user has the device.
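One way to picture this kind of context-to-mode mapping is as a small set of prioritized rules. The sketch below is purely illustrative and is not ModeSense's actual logic: the `RoomContext` fields, the thresholds, and the fallback "Normal Mode" are all hypothetical stand-ins for the room schedule, sensed context, and social information mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoomContext:
    # All fields are hypothetical stand-ins for the inputs named in the text.
    scheduled_event: Optional[str]   # from the room schedule, e.g. "meeting"
    ambient_noise_db: float          # sensed contextual information
    people_present: int              # rough proxy for social information


def choose_mode(ctx: RoomContext) -> str:
    """Pick a device mode; the schedule takes priority over sensed context."""
    if ctx.scheduled_event == "screening":
        return "Theater Mode"
    if ctx.scheduled_event == "meeting":
        return "Meeting Mode"
    # Illustrative thresholds only: a crowded, loud room with nothing scheduled.
    if ctx.people_present >= 10 and ctx.ambient_noise_db >= 75.0:
        return "Party Mode"
    return "Normal Mode"             # hypothetical fallback, not named in the text


print(choose_mode(RoomContext("meeting", 45.0, 6)))
print(choose_mode(RoomContext(None, 82.0, 25)))
```

Giving the schedule priority over sensed signals is a design choice made for this sketch; a real system could just as well weigh the three sources together.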

ArtBoat

Laura Perovich, OBMG UROPs, and V. Michael Bove, Jr.

ArtBoat is a tool for communities to make collaborative light paintings in public space and reimagine the future of our cities. In 2018 we held ArtBoat events in Cambridge, MA, and Allston, MA, in partnership with Magazine Beach and Revels RiverSing. See our website for pictures from the events and more information about the project: artboatcommunity.com.

Photo: Jorge Valdez

Open Water Data

Laura Perovich, Sara Wylie (Northeastern University), Roseann Bongiovanni (GreenRoots), GreenRoots ECO

The Open Water Data project explores data physicalization as a path to community engagement and action on important environmental issues. For our 2018 installation “Chemicals in the Creek” we released glowing lanterns representing water quality permit violations from local facilities onto the river as part of a performance of local environmental challenges that informed a community conversation on these issues. See our website for pictures from the events and more information about the project: datalanterns.com.

Photo: Rio Asch Phoenix

Thermal Fishing Bob / SeeBoat

Laura Perovich, Sara Wylie (Northeastern University), Don Blair

Two of the most important traits of environmental hazards today are their invisibility and the fact that they are experienced by communities, not just individuals. Yet we don’t have a good way to make hazards like chemical pollution visible and intuitive. The thermal fishing bob seeks to visceralize rather than simply visualize data by creating a data experience that makes water pollution data present. The bob measures water temperature and displays that data by changing color in real time. Data is also logged to be physically displayed elsewhere and can be further recorded using long-exposure photos. SeeBoat implements similar sensors and displays in a fleet of radio-controlled toy boats. Making environmental data experiential and interactive will help both communities and researchers better understand pollution and its implications.
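The idea of showing temperature as color can be sketched as a simple linear mapping. The code below is an illustration under stated assumptions, not the bob's firmware: the function name, the blue-to-red ramp, and the 5-30 °C range are all hypothetical choices.

```python
def temperature_to_rgb(temp_c: float, cold_c: float = 5.0, hot_c: float = 30.0):
    """Map a water temperature to an (R, G, B) color on a blue-to-red ramp.

    cold_c and hot_c are hypothetical calibration points; readings outside
    that range are clamped to pure blue or pure red.
    """
    fraction = (temp_c - cold_c) / (hot_c - cold_c)
    fraction = max(0.0, min(1.0, fraction))       # clamp to [0, 1]
    red = round(255 * fraction)
    blue = round(255 * (1.0 - fraction))
    return (red, 0, blue)


print(temperature_to_rgb(5.0))    # (0, 0, 255)
print(temperature_to_rgb(30.0))   # (255, 0, 0)
print(temperature_to_rgb(12.0))
```

A linear ramp between two colors is the simplest option; a perceptually uniform colormap or a stepped palette might be easier to read in long-exposure photos, but that is a design question the description leaves open.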

Looking Sideways Inspiration Exploration Tool

Philippa Mothersill

Looking Sideways is an online exploration tool that seeks to provoke unexpected inspiration and create new associations by providing users with a selection of semi-randomly chosen, loosely related, diverse online sources from art, design, history, and literature for every search query.

Read more here: sideways.media.mit.edu

Reframe Creative Prompt Tool

Philippa Mothersill

Reframe (previously design(human)design) is a computational creative prompt tool that provokes new associations between concepts in a user’s project. Using text from a designer’s own notes and readings, Reframe presents a randomised prompt, helping to juxtapose concepts in new ways.

Read more here: reframe.media.mit.edu

Design Daydreams Augmented Reality Post-it Note

Philippa Mothersill

The Design Daydreams augmented drafting table projects Looking Sideways onto a tabletop to allow a more tangible interaction with the information being explored. Combined with a low-tech augmented reality tool that uses any mobile device in a simple holder to project digital animations on top of objects around the user, the tool allows digital and physical concepts to be overlaid on top of each other to provoke new reinterpretations and creative inspiration.

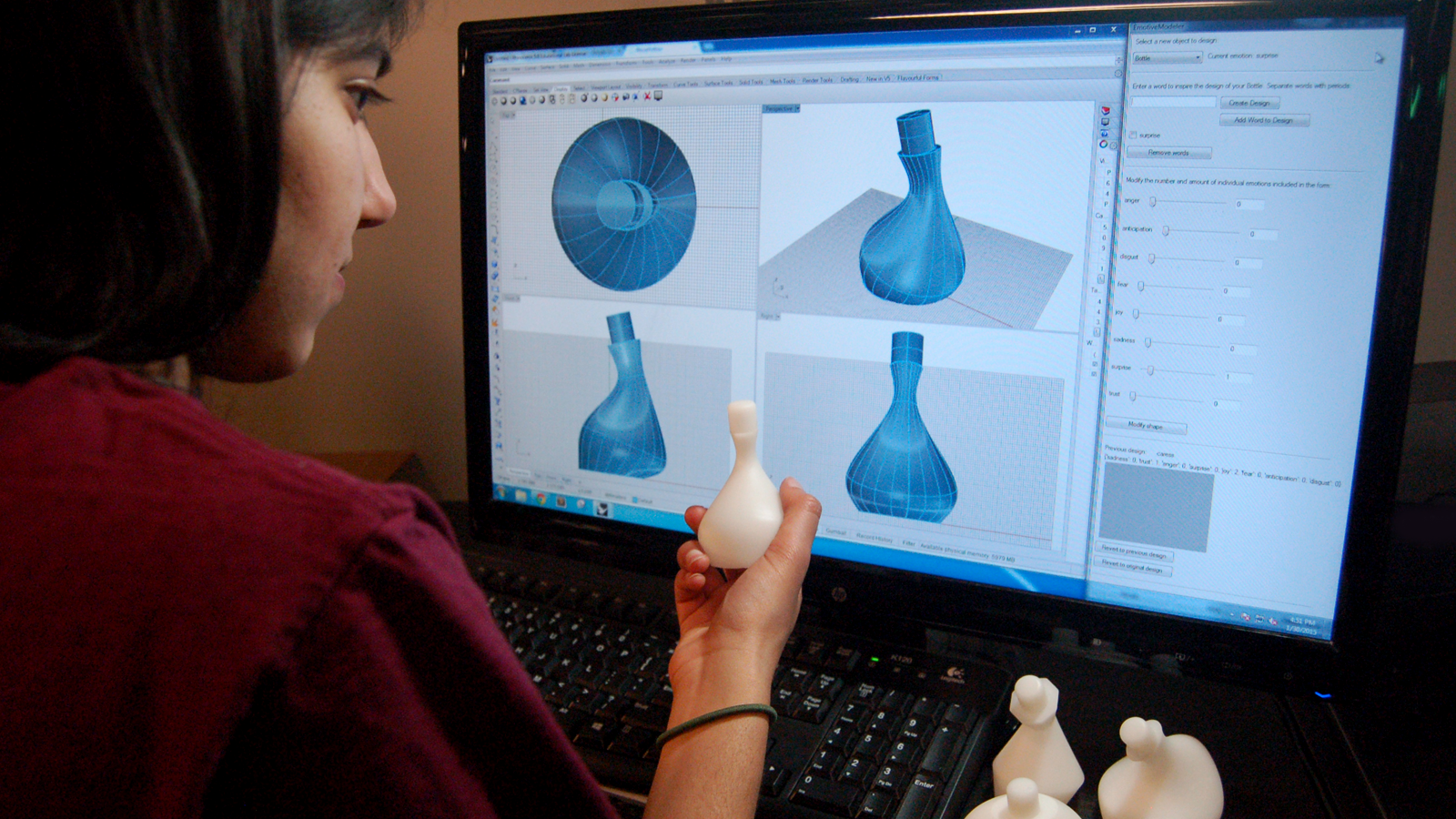

EmotiveModeler: An Emotive Form Design CAD Tool

Philippa Mothersill

Whether or not we’re experts in the design language of objects, we have an unconscious understanding of the emotional character of their forms. EmotiveModeler integrates knowledge about our emotive perception of shapes into a CAD tool that uses descriptive adjectives as an input to aid both expert and novice designers in creating objects that can communicate emotive character.

Read more here: emotivemodeler.media.mit.edu

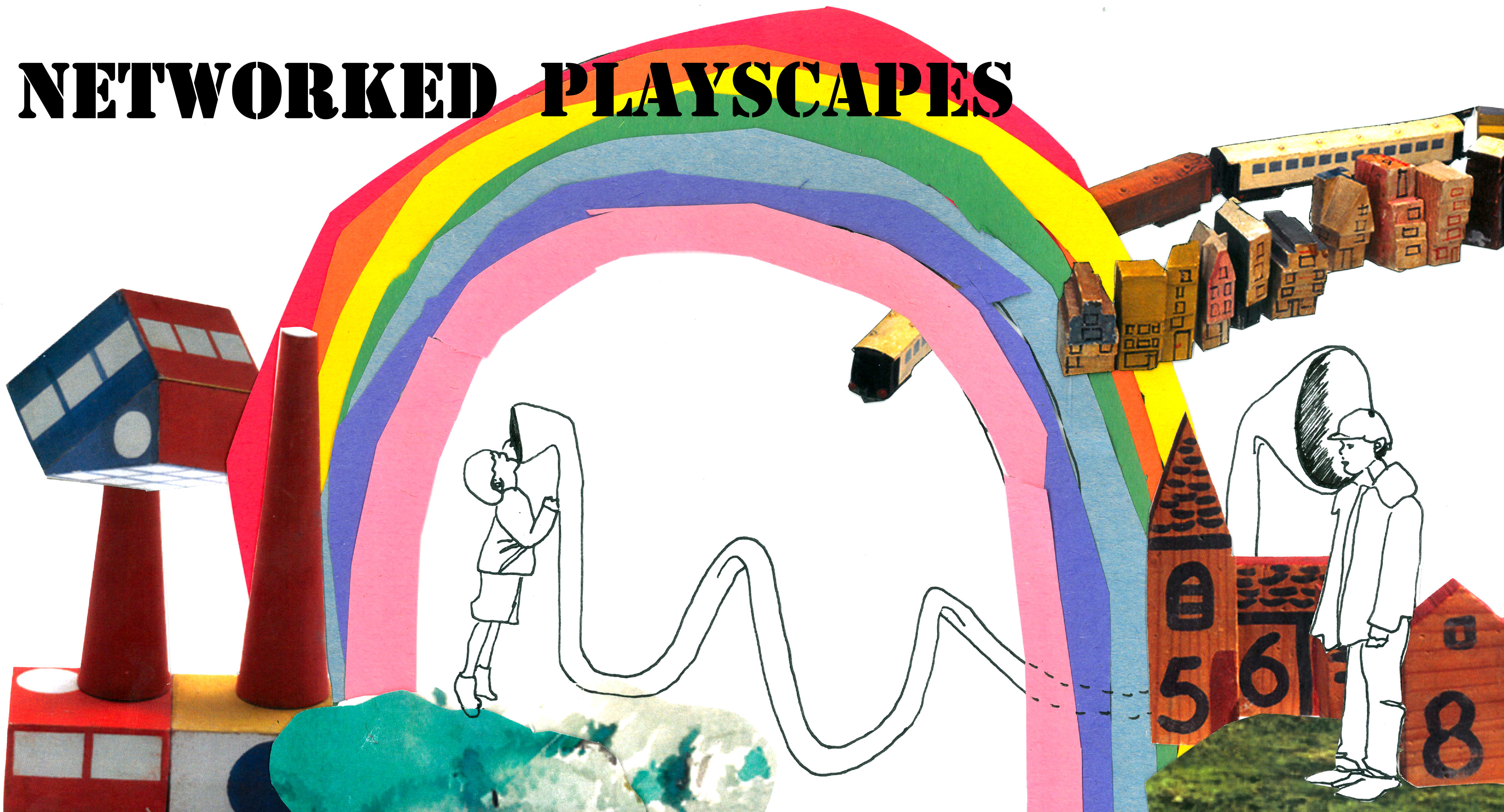

Networked Playscapes

Edwina Portocarrero

Playgrounds are suffering abandonment. The pervasiveness of portable computing devices has taken over most of the “play time” of children and teens and confined it to human-screen interaction. The digital world offers wonderful possibilities the physical can’t, but we should not forget that it does not substitute for the ones the physical environment possesses. As much as we can augment ourselves digitally, we can do so physically as well. Playgrounds are physical, public, ludic spaces shared by a community through generations. They can be built by anyone and out of virtually anything: every landscape, urban, rural, or suburban, offers a myriad of materials, known and unthought of, to be put to the service of play. Playground design has huge potential when thinking in constructivist terms and of community building. Furthermore, playgrounds offer an overlooked opportunity to learn about physics and chemistry, perception and illusion, subjects not always taken into account in their design. A successful playground should involve children as co-designers, bring community together, and challenge mental, social, and motor skills while providing sensory stimulation and perceptual awareness. Playgrounds bring about some of the best qualities of children: their ability to befriend without profiling, opportunism, or interest. At a playground, children make friends, not connections! My interest lies in taking the physical and the digital and re-imagining and creating playscapes that merge the best of both worlds, engaging bodies, minds, and people together.

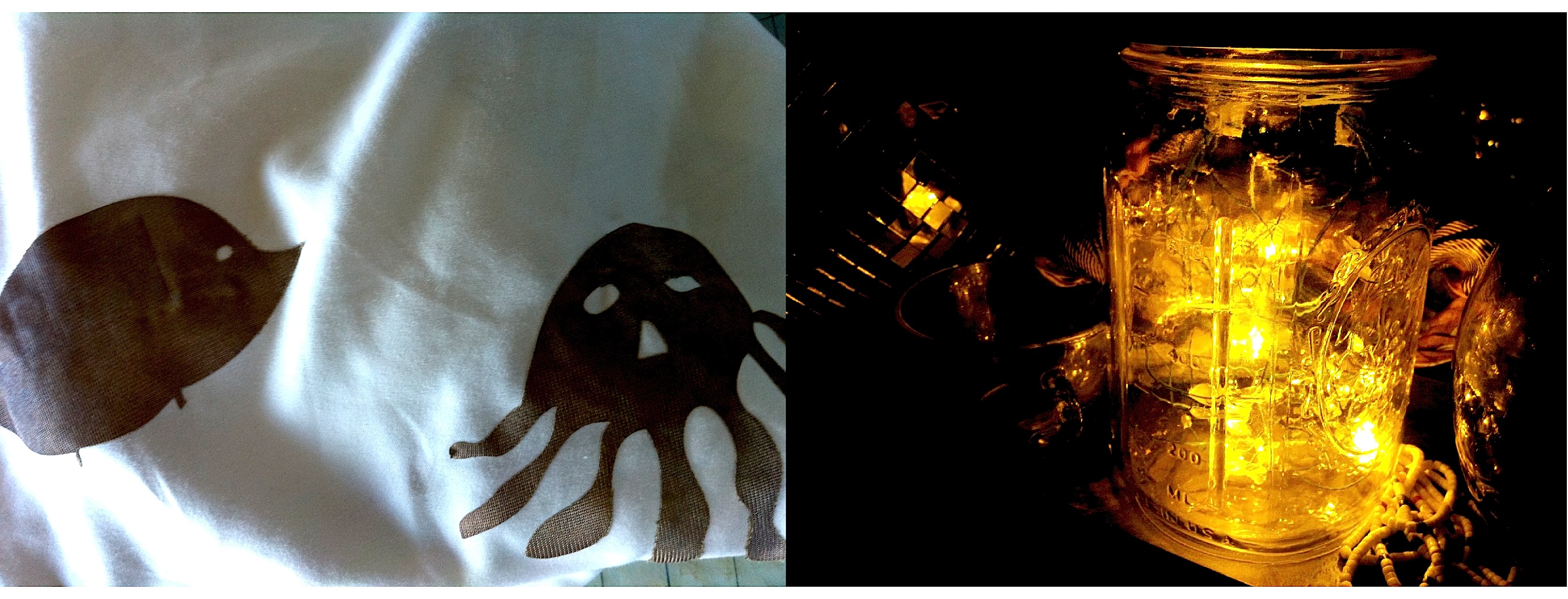

Dressed in Data

Laura Perovich

“Dressed in Data” steps beyond data visualizations to create data experiences that engage not only the analytic mind, but also the artistic and emotional self. Data is taken from a study of indoor air pollutants to create four outfits, each outfit representing findings from a particular participant and chemical class. Pieces are computationally designed and laser cut, with key attributes of the data mapped to the lace pattern. This is the first project in a series that seeks to create aesthetic data experiences that prompt researchers and laypeople to engage with information in new ways.

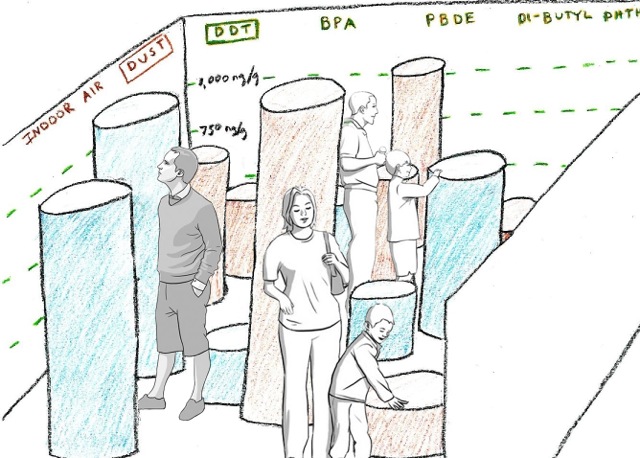

BigBarChart

Laura Perovich

BigBarChart is an immersive 3D bar chart that provides a physical way for people to interact with data. It takes data beyond visualizations to map out a new area, “data experiences,” which are multisensory, embodied, and aesthetic interactions.

BigBarChart is made up of a number of bars that extend up to 8′ tall to create an immersive experience. Bars change height and color and respond to interactions that are direct (e.g. person entering the room), tangible (e.g. pushing down on a bar to get meta information), or digital (e.g. controlling bars and performing statistical analyses through a tablet). BigBarChart helps both scientists and the general public understand information from a new perspective.

Radical Textiles

Laura Perovich, David Nunez, Christian Ervin

This project presents a 5-year vision for Radical Textiles–fabrics with computation, sensing, and actuating seamlessly embedded in each fiber. Textiles are held close to the body–through clothing, bedding, and furniture–providing an opportunity for novel tactile interactions. We explore possible applications for Radical Textiles, propose a design framework for gestural and contextual interaction, and discuss technical advances that make this future plausible.

Autostereoscopic Aerial Light-Field Display

Dan Novy

Suitable for anywhere a “Pepper’s Ghost” display could be deployed, this display adds 3D with motion parallax, as well as optically relaying the image into free space such that gestural and haptic interfaces can be used to interact with it. The current version is able to display a person at approximately full-size.

“Classic” Projects

Live Objects (2013-16)

Valerio Panzica La Manna, Arata Miyamoto, and V. Michael Bove, Jr.

A Live Object consists of a new small device that can stream media content wirelessly to nearby mobile devices without an Internet connection. Live Objects are associated with real objects in the environment, such as an art piece in a museum, a statue in a public space, or a product in a store. Users exploring a space can discover nearby Live Objects and view content associated with them, as well as leave comments for future visitors. The mobile device retains a record of the media viewed (and links to additional content), while the objects can retain a record of who viewed them. Future extensions will look into making the system more social, exploring game applications such as media “scavenger hunts” built on top of the platform, and incorporating other types of media such as live and historical data from sensors associated with the objects.

Project website

DesignData Machine (2015-16)

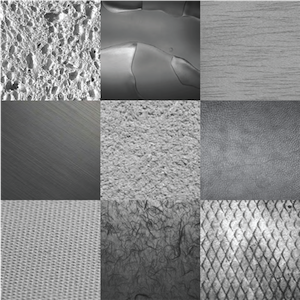

Pip Mothersill, Bianca Datta

Objects can communicate a wide range of meanings to us through their form, colours, materials and so on, and can be defined by highly numerical, functional engineering properties or by much more tacitly understood, emotive artistic properties. Instead of creating ‘smooth’ forms with a certain surface function or ‘soft’ materials with a specified Shore hardness as an engineer might, the designer will search for forms or materials that people perceive as embodying the more complexly emotive feeling of ‘comforting’. Unlike the many databases for scientific material properties, there exists no comprehensive database for these more experiential design properties. In our research we strive to understand and quantify these more emotively communicative aspects of the design properties of objects. The DesignData machine is a first step towards connecting these functional and emotive languages by collecting data on the more abstract, emotive ways that people perceive and assign meaning to forms and materials. To accomplish this, massive quantities of responses to design samples must be collected rapidly from a large cross-section of society – a task that would take hundreds of hours if carried out using the more traditional in-person interview methodology. This project tackles the challenge of collecting large amounts of qualitative evaluation data on physical samples by providing an automated physical interface to simple A/B comparison testing.
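As a rough illustration of how A/B comparison responses might be aggregated, the sketch below tallies how often each sample is chosen for a given adjective. The record layout, the sample names, and the `win_rates` function are hypothetical and are not taken from the DesignData machine itself.

```python
from collections import Counter


def win_rates(comparisons):
    """Return the fraction of comparisons each sample won.

    `comparisons` is an iterable of (sample_a, sample_b, winner) tuples,
    a hypothetical record format chosen for this example.
    """
    wins, shown = Counter(), Counter()
    for sample_a, sample_b, winner in comparisons:
        if winner not in (sample_a, sample_b):
            raise ValueError(f"winner {winner!r} was not one of the two samples")
        shown[sample_a] += 1
        shown[sample_b] += 1
        wins[winner] += 1
    return {sample: wins[sample] / shown[sample] for sample in shown}


# Hypothetical answers to "which sample feels more comforting?"
responses = [
    ("felt", "steel", "felt"),
    ("felt", "cork", "felt"),
    ("cork", "steel", "cork"),
    ("felt", "cork", "cork"),
]
rates = win_rates(responses)
for sample in sorted(rates, key=rates.get, reverse=True):
    print(f"{sample}: {rates[sample]:.2f}")
```

A plain win rate ignores which opponents a sample faced; a pairwise-comparison model would be a natural next step if the data were unbalanced.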

Awakened Apparel (2013)

Laura Perovich, Pip Mothersill, and Jenny Broutin Farah

This project investigates soft mechanisms, origami, and fashion. We created a modified Miura fold skirt that changes shape through pneumatic actuation. In the future, our skirt could dynamically adapt to the climatic, functional and emotional needs of the user–for example, it might become shorter in warm weather, or longer if the user felt threatened.

Ultra-High Tech Apparel (2013)

Philippa Mothersill, Laura Perovich, and MIT Media Lab Director’s Fellows Christopher Bevans (CBAtelier) and Philipp Schmidt

The classic lab coat has been a reliable fashion staple for scientists around the world. But Media Lab researchers are not only scientists – we are also designers, tinkerers, philosophers and artists. We need a different coat! Enter the Media Lab coat – Our lab coat is uniquely designed for, and with, the Media Lab community. It features reflective materials, new bonding techniques, and integrated electronics. One size fits One – Each Labber has different needs. Some require access to Arduinos, others need moulding materials, yet others carry around motors or smart tablets. The lab coat is a framework for customization. Ultra High Performance Lab Apparel – The coat is just the start. Together with some of the innovative member companies of the MIT Media Lab we are exploring protective eye-wear, shoe-wear and everything in between.

Digital Synesthesia (2015)

Santiago Alfaro

Digital Synesthesia looks to evolve the idea of Human-Computer Interfacing and give way to Human-World Interacting. It aims to find a way for users to experience the world by perceiving information outside of their sensory capabilities. Modern technology already offers the ability to detect information from the world that is beyond our natural sensory spectrum, but what hasn’t been properly done is to find a way for our brains and bodies to incorporate this new information as part of our sensory toolkit, so that we can understand our surrounding world in new and undiscovered ways. The long-term vision is to give users the ability to turn senses on and off depending on the desired experience. This project is part of the Ultimate Media initiative and will be applied to the navigation and discovery of media content.

Narratarium (2014)

Dan Novy, Santiago Alfaro, and members of the Digital Intuition Group

Remember telling scary stories in the dark with flashlights? Narratarium is a 360-degree context-aware projector, creating an immersive environment to augment stories and creative play. We are using natural language processing to listen to and understand stories being told and thematically augment the environment using images and sound. Other activities such as reading an e-book or playing with sensor-equipped toys likewise can create an appropriate projected environment, and a traveling parent can tell a story to a child at home and fill the room with images, sounds, and presence.

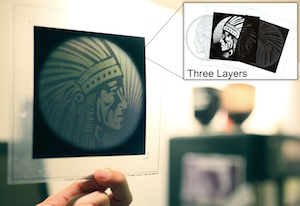

Multilayer Diffractive BxDF Displays (2014)

Sunny Jolly and members of the Camera Culture group

With a wide range of applications in product design and optical watermarking, computational BxDF display has become an emerging trend in the graphics community. Existing surface-based fabrication techniques are often limited to generating only specific angular frequencies, angle-shift-invariant radiance distributions, and sometimes only symmetric BxDFs. To overcome these limitations, we propose diffractive multilayer BxDF displays. We derive forward and inverse methods to synthesize patterns that are printed on stacked, high-resolution transparencies and reproduce prescribed BxDFs with unprecedented degrees of freedom within the limits of available fabrication techniques.

Toward BxDF Display using Multilayer Diffraction (SIGGRAPH Asia 2014 – Project Video)

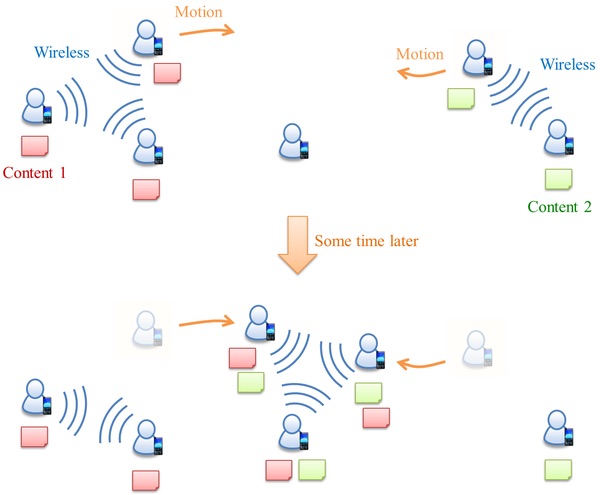

ShAir (2014)

Arata Miyamoto, Valerio Panzica La Manna, Konosuke Watanabe, Yosuke Bando, Daniel J. Dubois, V. Michael Bove, Jr.

ShAir creates autonomous ad-hoc networks of mobile devices that do not rely on cellular networks or the Internet. When people move around, their phones automatically talk to other phones wirelessly when they come close to each other, and they exchange content. Content can be messages, pictures, video, emergency alerts, GPS coordinates, SOS signals, sensor readings, etc. At a given moment, one piece of content (Content 1) may be shared among one group of nearby people while another (Content 2) is shared among a different group. When people move, their devices carry the shared content in their storage to another place and share it further with other people. In this way, content can hop through people’s devices, taking advantage of people’s motion and the devices’ radio and storage. No infrastructure such as cell towers, Wi-Fi hotspots, or communication cables is required.
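The store-carry-forward behaviour described above can be sketched in a few lines. This is a toy model only: the `Device` class, its methods, and the content identifiers are hypothetical and say nothing about ShAir's actual radio protocol or API.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A phone that stores content and shares it with phones it meets."""
    name: str
    store: dict = field(default_factory=dict)  # content id -> payload

    def publish(self, content_id: str, payload: str) -> None:
        self.store[content_id] = payload

    def meet(self, other: "Device") -> None:
        """Exchange whatever content the other device does not hold yet."""
        for content_id, payload in list(self.store.items()):
            other.store.setdefault(content_id, payload)
        for content_id, payload in list(other.store.items()):
            self.store.setdefault(content_id, payload)


alice, bob, carol = Device("alice"), Device("bob"), Device("carol")
alice.publish("content-1", "SOS: need water")
carol.publish("content-2", "road closed")

alice.meet(bob)   # bob walks past alice and picks up content-1
bob.meet(carol)   # bob later meets carol, who never came near alice

print(sorted(carol.store))  # ['content-1', 'content-2']
print(sorted(alice.store))  # ['content-1']
```

Carol receives Alice's content without ever being in range of her, because Bob physically carried it; Alice will only see Content 2 if she later meets a device that holds it.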

ShAir Web site

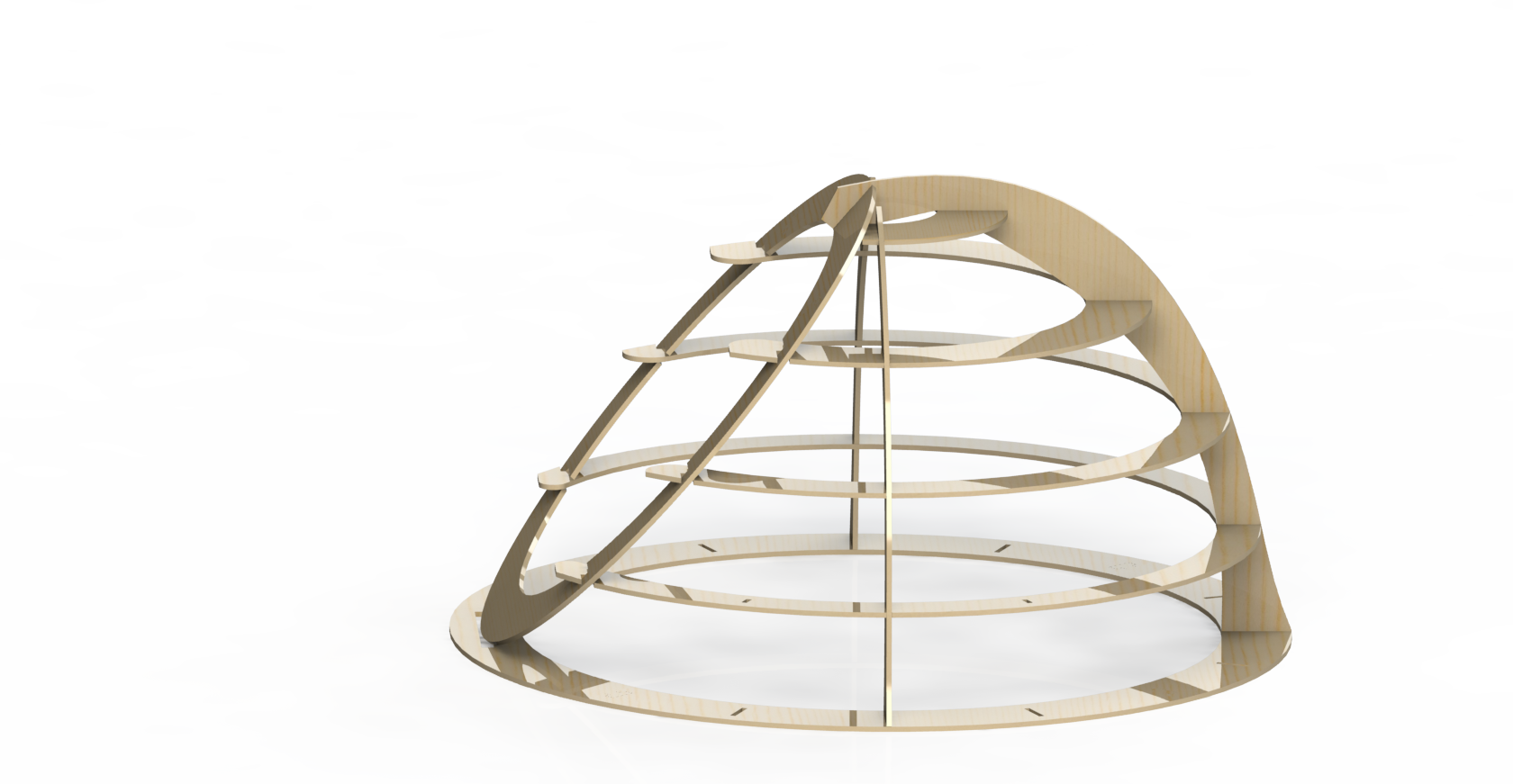

DUSK (2014)

Bianca Datta, Ermal Dreshaj

DUSK was created as part of the MIT Media Lab Wellness Initiative (a Robert Wood Johnson Foundation grant), to create private, restful spaces for people at the workplace. DUSK promotes a vision of a new type of “nap pod”, where workers are encouraged to use the structure for regular breaks and meditation on a daily basis. The user is provided the much-needed privacy to take a phone call, focus, or rest inside the pod for short periods during the day. The inside can be silent, or filled by binaural beats audio, pitch black, or illuminated by a sunlamp–whatever works for the user to get that necessary rest and relaxation so they can continue to be healthy and productive. DUSK is created with a parametric press-fit design, making it scalable and suitable for fabrication customizable on a per-user basis.

LIMBO (2014)

Ermal Dreshaj, Sang-Won Leigh (Fluid Interfaces Group), Artem Dementyev (Responsive Environments Group)

Project LIMBO, standing for Limbs In Motion By Others, aims to create technologies to support people who have lost the ability to control a certain part of their body, or who are attempting sophisticated tasks beyond their capabilities. Our strategy is to use functional electrical stimulation (FES) as a means of direct control of, and feedback on, muscle activity, reprogramming the way human body parts are controlled. We envision scenarios of using muscle stimulation for extending motor-control capability or giving feedback for adjusting body motions. For example, paralyzed people could regain the experience of grasping with their hands by actuating hand muscles based on gaze gestures. People who have lost leg control could control their legs with finger movement – and be able to drive a car without special assistance. LIMBO has been a featured demonstration in workshops at SXS 2014 and CHI 2014.

LIMBO: Sci-fi 2 Sci-fab Page

LIMBO: Fluid Interfaces landing page

Bottles&Boxes (2014)

Ermal Dreshaj, Dan Novy

Bottles&Boxes uses optical sensors to determine which bottle is placed into which slot. This work was done in collaboration with Natura, in an effort to better understand how people use products at home. Bottles&Boxes allows tracking usage as well as ranking the order of preference of products based on the order in which they are replaced in a box, with information reported wirelessly for analysis. With Bottles&Boxes, we envision a scenario where users are able to give feedback about a product to a company in real time, which allows for better iterative design, anticipating market demands and the needs of users.
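One way such a ranking could be derived is sketched below. It assumes, purely for illustration, that each usage session yields the list of bottles in the order they were put back, and that a bottle returned earlier on average ranks higher; the function name and product names are hypothetical.

```python
from collections import defaultdict


def rank_by_replacement_order(sessions):
    """Rank bottles by their mean position in the put-back order.

    `sessions` is a list of lists; each inner list names the bottles in
    the order they were returned to the box during one session.
    Ties are broken alphabetically so the result is deterministic.
    """
    positions = defaultdict(list)
    for session in sessions:
        for position, bottle in enumerate(session, start=1):
            positions[bottle].append(position)
    mean_position = {b: sum(p) / len(p) for b, p in positions.items()}
    return sorted(mean_position, key=lambda b: (mean_position[b], b))


sessions = [
    ["shampoo", "conditioner", "lotion"],
    ["shampoo", "lotion", "conditioner"],
    ["conditioner", "shampoo", "lotion"],
]
print(rank_by_replacement_order(sessions))
# ['shampoo', 'conditioner', 'lotion']
```

Whether an early return signals preference is itself an assumption; the same tally works with the sort order reversed.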

Ambi-Blinds (2014)

Ermal Dreshaj

Ambi-blinds are solar-powered, sunlight-driven window blinds. A reinvention of a common household item, Ambi-blinds use the level of sunlight striking the window to automatically control the tilt of the blinds, effectively controlling how much sunlight is cast into a room depending on time of day. Sleep studies suggest that waking up with the sunlight regularly promotes wellness and quality of sleep, regulating our circadian rhythm throughout the day. By automatically regulating the user’s exposure to sunlight, Ambi-blinds promote the well-being of the user in a non-invasive way, and close at night to allow for privacy.
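A minimal sketch of a light-to-tilt mapping follows. The thresholds, the linear response, and the choice to open the slats further as light increases are all assumptions made for this example; the actual Ambi-blinds control law is not described here.

```python
def tilt_for_light(lux: float,
                   night_lux: float = 10.0,
                   full_sun_lux: float = 50_000.0) -> float:
    """Return a slat tilt in degrees: 0 = fully closed, 90 = fully open.

    `night_lux` and `full_sun_lux` are hypothetical calibration values.
    Below `night_lux` the blinds close for privacy; above it they open
    linearly until `full_sun_lux`, where they are fully open.
    """
    if lux <= night_lux:
        return 0.0
    fraction = min((lux - night_lux) / (full_sun_lux - night_lux), 1.0)
    return 90.0 * fraction


for reading in (0.0, 5_000.0, 25_005.0, 80_000.0):
    print(f"{reading:>8.0f} lux -> {tilt_for_light(reading):.1f} degrees")
```

A real controller would likely smooth the sensor readings and add hysteresis so that passing clouds do not make the slats chatter.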

Creating Ambi-blinds: How To Make Documentation

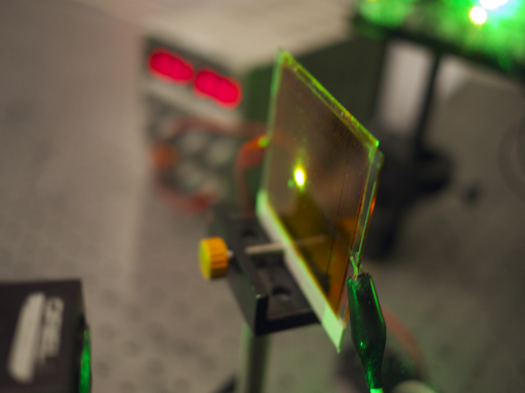

Direct Fringe Writing of Computer-Generated Holograms (2013)

Sunny Jolly

Photorefractive polymer has many attractive properties for dynamic holographic displays; however, the current display systems based around its use involve generating holograms by optical interference methods that complicate the optical and computational architectures of the systems and limit the kinds of holograms that can be displayed. We are developing a system to write computer-generated diffraction fringes directly from spatial light modulators to photorefractive polymers, resulting in displays with reduced footprint and cost, and potentially higher perceptual quality.

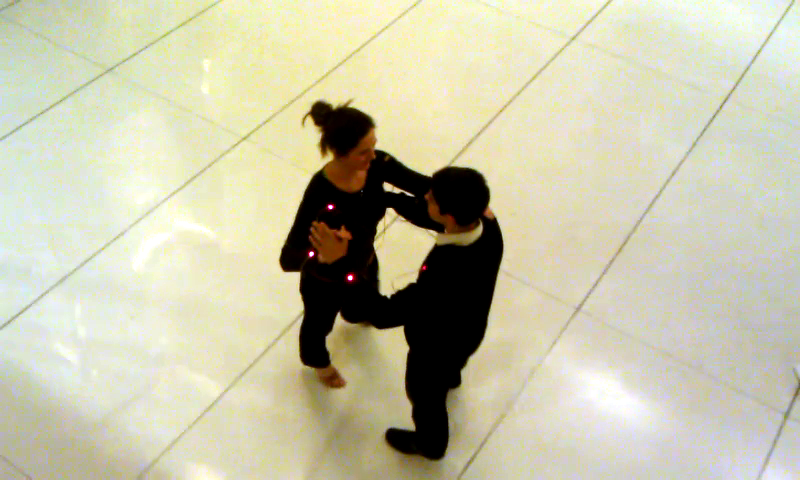

Motion Moderator (2013)

Laura Perovich

Learning to dance is a kinetic experience that can be enhanced through strengthening the connection between teachers, students, and dance partners. This project seeks to increase communication by collecting movement information and displaying it immediately through input and output devices in shoes and clothing. This playful feedback re-imagines the process of dance practice and education.

Infinity-by-Nine (2013)

Dan Novy

Infinity-by-Nine augments the traditional home theater or other viewing environment by immersing the viewer in a three-dimensional ensemble of imagery generated by analyzing an existing video stream. The system uses optical flow, color analysis, and pattern-aware out-painting algorithms to create a synthetic light field beyond the screen edge and projects it onto walls, ceiling, or other suitable surfaces within the viewer’s peripheral awareness. Infinity-by-Nine takes advantage of the lack of detail and different neural processing in the peripheral region of the eye. Users perceive the scene-consistent, low-resolution color, light, and movement patterns projected into their peripheral vision as a seamless extension of the primary content.

Project Video (Media Lab LabCAST)

3-D Telepresence Chair (2013)

Dan Novy

An autostereoscopic (no glasses) 3D display engine is combined with a “Pepper’s Ghost” setup to create an office chair that appears to contain a remote meeting participant. The system geometry is also suitable for other applications such as tabletop displays or automotive heads-up displays.

Simple Spectral Sensing (2012)

Andy Bardagjy

The availability of cheap LEDs and diode lasers in a variety of wavelengths enables the creation of simple and cheap spectroscopic sensors for specific tasks such as food shopping and preparation, healthcare sensing, material identification, and detection of contaminants or adulterants. This enables applications in food safety, health and wellness, sports, and education, among others. Since the sensors are specialized, they are intrinsically lower cost and lower power, and have a higher signal-to-noise ratio and a reduced form factor.

SlamForceNet (2012)

Dan Novy and Santiago Alfaro

An intelligent basketball net (which visually and behaviorally matches a standard NBA net) can measure the energy behind a dunked basketball. Its first public appearance was in the 2012 NBA Slam Dunk Competition.

Project video

Calliope (2011)

Edwina Portocarrero

Calliope builds on the idea of documentation as a valuable asset to learning. Calliope displays the modifications or “history” of every page by placing the corresponding tag. It also allows for multiple “layers” to be displayed at once, so the user can build on their previous work or start from scratch. Its tags are human-readable and can be drawn directly onto the pages of the sketchbook. Calliope also adds the ability to record sound on each page.

Surround Vision (2011)

Santiago Alfaro

We are exploring technical and creative implications of using a mobile phone or tablet (and possibly also dedicated devices like toys) as a controllable “second screen” for enhancing television viewing. Thus a viewer could use the phone to look beyond the edges of the television to see the audience for a studio-based program, to pan around a sporting event, to take snapshots for a scavenger hunt, or to simulate binoculars to zoom in on a part of the scene.

The NeverEnding Drawing Machine (2011)

Edwina Portocarrero, David Robert, Sean Follmer, Michelle Chung

The Never-Ending Drawing Machine (NEDM), a portable stage for collaborative, cross-cultural, cross-generational storytelling, integrates a paper-based tangible interface with a computing platform in order to emphasize the social experience of sharing object-based media with others. Incorporating analog and digital techniques as well as bi-directional capture and transmission of media, it offers co-creation among peers whose expertise may not necessarily be in the same medium, extending the possibility of integrating objects as objects, as characters or as backgrounds. Calliope is a newer, portable version of the system.

Project video

Gamelan Headdresses (2011)

Edwina Portocarrero, Jesse Gray

Blending tradition and technology, these headdresses aim to illustrate “Galak Tika”, Bahasa Kawi (classical Javanese, Sanskrit dialect) meaning “intense togetherness.” Considering the cultural, ritualistic and performative aspects of Gamelan music, they are rooted in tradition through material, shape and color, while their electroluminescent wire allows the audience to visualize the complex rhythmic interlocking, or “kotekan”, that makes Gamelan music unique. The headdresses are wireless, have a long battery life and are robust and lightweight. They are flexible in their control, allowing MIDI, OSC or direct audience participation through an API.

Pillow-Talk (2010)

Edwina Portocarrero, David Cranor

Pillow-Talk is designed to aid creative endeavors through the unobtrusive acquisition of unconscious self-generated content to permit reflexive self-knowledge. Composed of a seamless voice-recording device embedded in a pillow, Pillow-Talk captures that which we normally forget. This allows users to record their dreams in a less mediated way, aiding recollection by priming the experience and providing no distraction for recall and capture through embodied interaction. The Jar is a simple Mason jar with amber-colored LEDs dangling inside it, evocative of fireflies. The neck of the Jar encloses the Animator: it incorporates data storage, sound playback, 16-channel PWM LED control and wireless communication via an XBee radio into a single small board.

Touch-related interactions:

Shake-on-it (2010)

David Cranor

ShakeOnIt is a project which explores interaction modalities made possible by multi-person gestures. It was developed as part of Hiroshi Ishii’s Tangible Interfaces class. My team and I created a pair of gloves which detect a series of gestures that are part of a “secret handshake.” This method of authentication encodes the process of supplying credentials to the system in a widely accepted social ritual, the handshake. The performance of the handshake becomes something more than simply giving someone a password; by its very nature, the system actually tests that the people using it have practiced the series of gestures together often enough to perform it successfully. This allows the system to test not only credentials, but social familiarity.
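The idea of checking both the gesture sequence and how well the two people perform it together can be sketched as follows. The gesture names, the timestamped event format, and the `max_skew_s` tolerance are hypothetical; the original gloves' sensing and matching method is not described here.

```python
def handshake_matches(enrolled, glove_a, glove_b, max_skew_s=0.25):
    """Check a two-person handshake against an enrolled gesture sequence.

    `enrolled` is a list of gesture names. `glove_a` and `glove_b` are
    lists of (gesture, timestamp_in_seconds) pairs, one per glove.
    Both gloves must report the enrolled gestures in order, and each
    gesture must occur on both gloves within `max_skew_s` seconds, a
    crude stand-in for "practiced together".
    """
    if len(glove_a) != len(enrolled) or len(glove_b) != len(enrolled):
        return False
    for expected, (gesture_a, t_a), (gesture_b, t_b) in zip(enrolled, glove_a, glove_b):
        if gesture_a != expected or gesture_b != expected:
            return False
        if abs(t_a - t_b) > max_skew_s:
            return False
    return True


enrolled = ["fist_bump", "palm_slap", "grip"]
glove_a = [("fist_bump", 0.00), ("palm_slap", 0.80), ("grip", 1.50)]
glove_b = [("fist_bump", 0.05), ("palm_slap", 0.85), ("grip", 1.45)]
out_of_sync = [("fist_bump", 0.05), ("palm_slap", 2.50), ("grip", 3.00)]

print(handshake_matches(enrolled, glove_a, glove_b))      # True
print(handshake_matches(enrolled, glove_a, out_of_sync))  # False
```

Knowing the sequence alone is not enough in this sketch: a partner who hesitates fails the timing check, which loosely mirrors the social-familiarity test described above.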

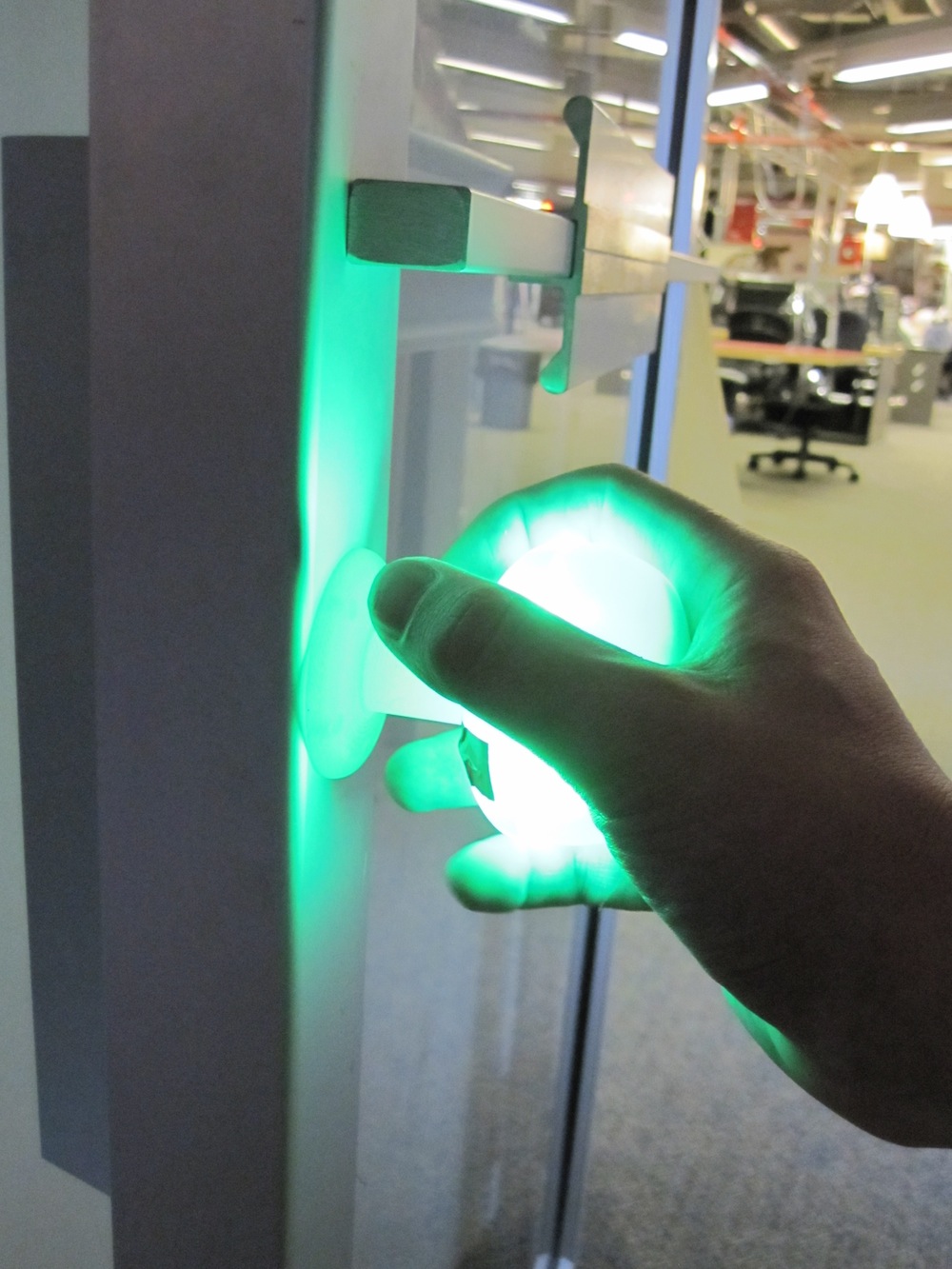

Intelliknob (2010)

David Cranor

This doorknob has capacitive touch sensors on its back side so that it can sense the user’s grasp. Because the touch sensors are concealed, it is inherently more secure against simple “looking over the shoulder” attacks than a standard door-access keypad. More importantly, although the touch sensors add a channel for information transfer between the user and the door’s locking mechanism, the user does not have to change their normal pattern of interacting with a doorknob.

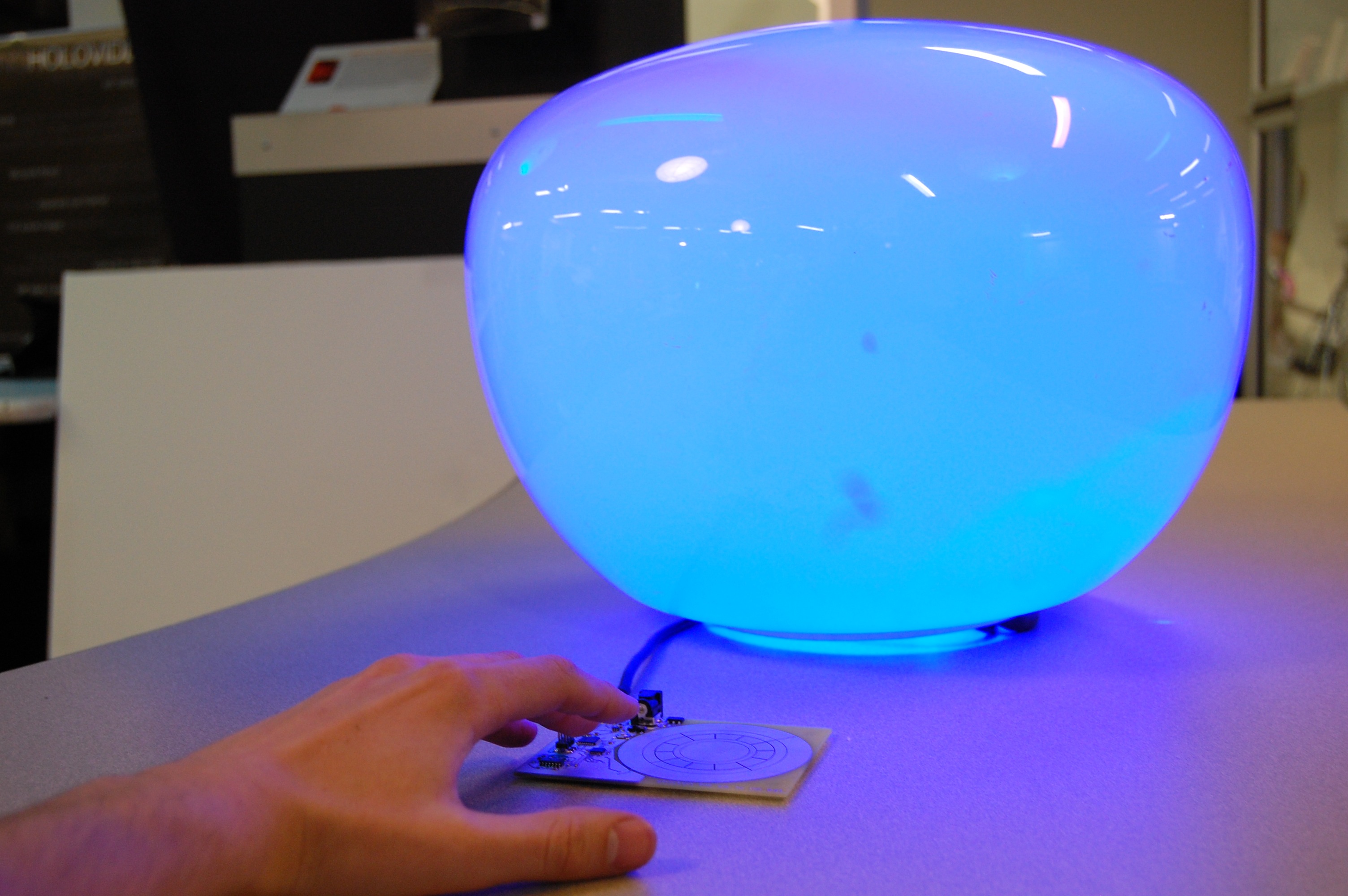

Magic Hands (2010)

David Cranor

An assortment of everyday objects is given the ability to understand multitouch gestures of the sort used in mobile-device user interfaces, enabling people to use such increasingly familiar gestures to control a variety of objects and to “copy” and “paste” configurations and other information among them.

Thinner Client (2010)

David Cranor

Many more people in the world have access to television screens and mobile phone networks than to full-fledged desktop computers with broadband Internet connections. We created the Thinner Client to explore how this pre-installed infrastructure can be leveraged to enable low-cost computing. The device uses a television as a display, connects to the Internet via a standard serial port, and costs less than $10 US to produce.

Project Source and Board Design Files

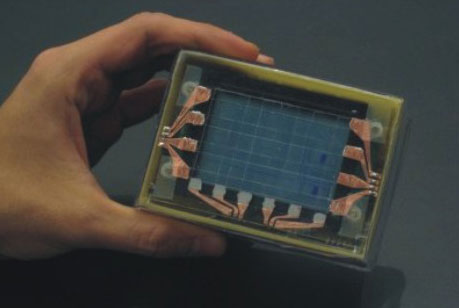

Graspables: The Bar of Soap (2009)

Brandon Taylor

Grasp-based interfaces combine finger-touch pattern sensing with pattern recognition algorithms to provide interfaces that can “read the user’s mind.” As an example, the “Bar of Soap” is a hand-held device that can detect the finger-touch pattern on its surface and determine its desired operational mode (e.g. camera, phone, remote control, game) based on how the user is grasping it. We have also managed to fit the electronics into a baseball that can classify a pitch based on how the user is gripping the ball (which we are using as the input to a video game). Project video
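The text does not specify which pattern recognition algorithm the Bar of Soap uses. As a minimal sketch of the general idea, the example below treats a grasp as a vector of on/off touch sensors and picks the mode whose stored template differs from the reading in the fewest positions. The eight-sensor layout, the template values, and the function names are hypothetical, chosen only to illustrate template matching.

```python
from typing import Dict, Tuple

TouchPattern = Tuple[int, ...]  # one 0/1 entry per touch sensor

# Hypothetical templates for an imagined 8-sensor device.
TEMPLATES: Dict[str, TouchPattern] = {
    "camera": (1, 1, 0, 0, 0, 0, 1, 1),
    "phone":  (0, 1, 1, 1, 1, 0, 0, 0),
    "remote": (0, 0, 0, 1, 1, 1, 1, 0),
}


def hamming(a: TouchPattern, b: TouchPattern) -> int:
    """Count the sensor positions where two touch patterns differ."""
    return sum(x != y for x, y in zip(a, b))


def classify_grasp(reading: TouchPattern, templates: Dict[str, TouchPattern]) -> str:
    """Return the mode whose template is closest to the sensed grasp."""
    return min(templates, key=lambda mode: hamming(reading, templates[mode]))
```

A real device would likely need many more sensors and a classifier trained on recorded grasps; nearest-template matching is used here only because it is the simplest technique that shows how a touch pattern can select an operational mode.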

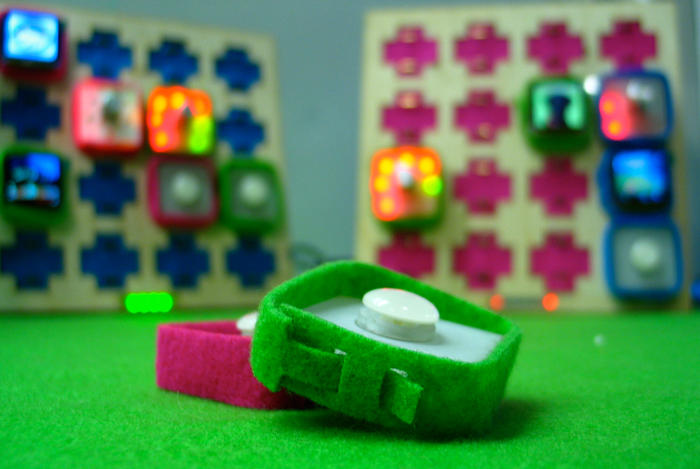

Connectibles (2008)

Jeevan Kalanithi

The Connectibles system is a peer-to-peer, server-less social networking system implemented as a set of tangible, exchangeable tokens. The Sifteo cubes are an outgrowth of this work and the Siftables project from the Fluid Interfaces group. Project page

BYOB (2005)

Gauri Nanda

BYOB is a computationally enhanced modular textile system that makes available a new material from which to construct “smart” fabric objects (bags, furniture, clothing). The small modular elements are flexible, networked, input/output capable, and interlock with other modules in a reconfigurable way. The object built out of the elements is capable of communicating with people and other objects, and of responding to its environment. Project Web site

Clocky (2005)

Gauri Nanda

As featured on Good Morning America, Jay Leno’s monologue, and in the comic strip Sylvia… Yes, we (Gauri, to be specific) were responsible for Clocky, the alarm clock that hides when the user presses the snooze button.

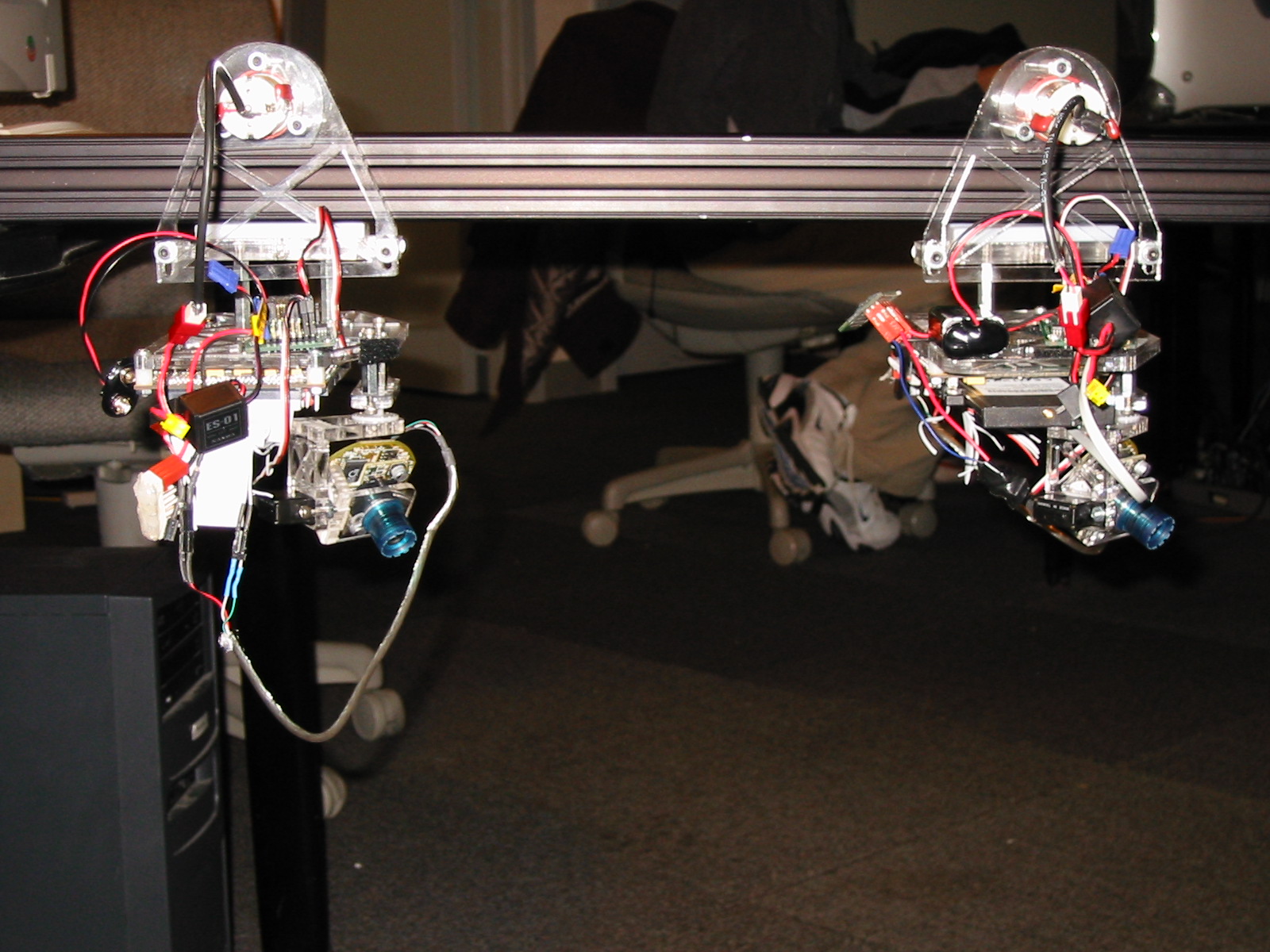

Collaborating Input-Output Ecosystems (2003-2006)

Jacky Mallett, Seongju Chang, Jim Barabas, Diane Hirsh, and Arnaud Pilpre

The Smart Architectural Surfaces system and the Eye Society mobile camera robots are two of the platforms we have developed for exploring group-forming protocols for self-organized collaborative problem solving by intelligent sensing devices. We have also used these platforms for experiments in ecosystems of networked consumer electronic products.

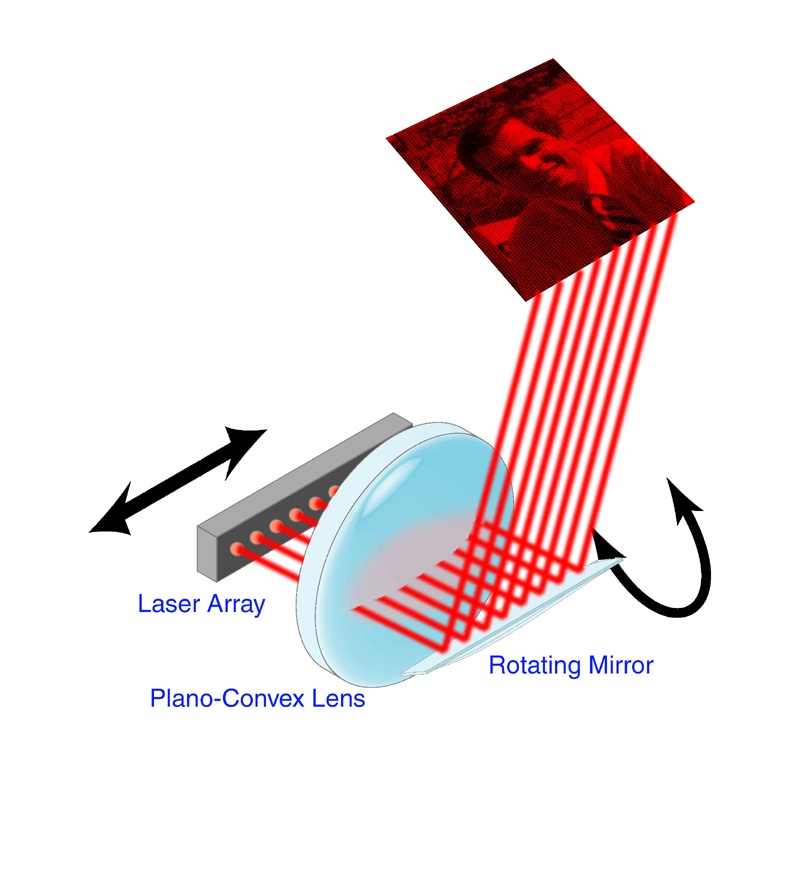

Personal Projection (2003)

Wilfrido Sierra

The Personal Projection project is looking to add video projection capabilities to very small devices without adding appreciably to their cost, form factor, or power consumption. The projector is based on a monolithic array of VCSELs.

Isis (1996-2001)

Stefan Agamanolis

Named after the Egyptian goddess of fertility, Isis is tailored in a number of ways — both in syntax and in internal operation — to support the development of demanding responsive media applications. Isis is a “lean and mean” programming environment, appropriate for research and laboratory situations. Isis software libraries strive to follow a “multilevel” design strategy, consisting of multiple interoperable layers that each offer a different level of abstraction of a particular kind of functionality but that also use the same core language elements as a basis. The small yet complete syntax fosters collaboration by lessening the burden on novices while still allowing experienced programmers to take full advantage of their skills. Isis also provides an efficient mechanism for extending functionality by accessing software libraries written in other languages. The Isis Web site gives a complete manual on the language, and more importantly it links to many different projects that use Isis. Isis is now available for free download under the GNU GPL (though certain libraries and applications are made available only to our sponsors and collaborators).

Three of our best-known Isis projects were HyperSoap (a TV soap opera with hyperlinked objects), Reflection of Presence (a telecollaboration system that segmented participants from their backgrounds and placed them in a gesture-controlled shared space), and iCom (an awareness and communication portal that linked lab spaces in the MIT Media Lab, Media Lab Europe, and other locations).

Cheops (1991-2003)

John Watlington and V. Michael Bove, Jr.

The Cheops Imaging Systems were compact and inexpensive self-scheduling dataflow supercomputers that we developed for experiments in super-HDTV, interactive object-based video, and real-time computation of holograms. A Cheops system ran the Mark II holographic video display for over ten years before its retirement. Read more about Cheops